If you’re confused about noindex in robots.txt, why you should use robots.txt, and how to use this key file, this is the right guide for you. We’re going to look at the robots.txt no index, how we use it for technical SEO and much more.

Contents

What is a robots.txt file?

Robots.txt’s primary use is to:

- Control search crawler traffic

- Keep files off of search engines

For example, let’s assume that you have a PDF file on your site that you want users to sign up for and receive. You don’t want Google to crawl and index this file, so you might use a robots.txt no index to tell Google’s bot, or any search engine, that it shouldn’t index this file.

What is a robots txt file used for?

Robots.txt can be used for managing how search engines crawl and index your site. You can use this file for:

- Web pages, to try and keep a page off of a search engine’s index. However, Google notes that the URL may still be shown in search results, but no description will be available.

- Media files, keep images, audio, video and any other media files off of search result pages.

- Resource files can be blocked, such as your scripts and style files, although this is often not recommended.

Is a robots txt file necessary?

No. If you don’t create a robots.txt file, search crawlers will continue crawling your site. Think of this file as a permission file. If the file doesn’t exist, the crawler will assume that it can freely crawl your site.

How does a robots.txt file work?

We’re going to show you an example of a robots.txt file shortly, but it works simply by uploading it to the root folder of your website. Crawlers will search for the file and take care of the rest. However, you must be sure to name the file in lower case to be found.

Where does robots.txt go on a site?

When you upload the robots.txt file, you should place it in the root of your site. For example, you want the file to be accessible at: site.com/robots.txt.

What protocols are used in a robots.txt file?

Robots.txt uses quite a few protocols, but the main one is called Robots Exclusion Protocol. This is the most commonly used protocol with this file because it alerts robots as to which resources or files cannot be accessed.

Additionally, the Sitemaps protocol can also be used.

Inside of the file, you can also set user agents for more refinement when choosing which bots can and cannot access files, folders, or resources.

What is a user agent?

User agents help identify users on the Internet. Agents can be programs or persons, but most site users will only be concerned with programs. A search crawler is a program, and the user agent is the “name” of the bot crawling the site.

When you see “User-agent:*” this means that the rules that follow relate to all search engines and bots.

However, you can name specific bots, too, such as:

- Googlebot

- Bingbot

- Baiduspider

- Etc.

We’ll see an example of blocking certain bots in the example below.

How do ‘Disallow’ commands work in a robots.txt file?

If you know about noindex in robots.txt, you may be wondering what other disallow commands exist and how they work. Thankfully, this is simple:

- Disallow: /hideme/secret.html – blocks a specific file

- Disallow: /hideme/ – blocks an entire folder

- Disallow: / – blocks the entire site

Let’s take a look at an example of what robots.txt may look like.

Example robots.txt

Allow the entire site to be accessed:

User-agent: * Allow: /

Block the entire site from being accessed:

User-agent: * Disllow: /

Here is a simple robots.txt file with two rules:

User-agent: Googlebot Disallow: /nogooglebot/ User-agent: * Allow: / Sitemap: http://www.example.com/sitemap.xml

Keep in mind that a single file can be used to control multiple bots with the instructions below each User-Agent.

Technical robots.txt syntax

Syntax matters a lot when creating your file. The many items that you can include in your file are:

- User-agent: to name specific crawlers or use * for all bots

- Disallow: command to tell a bot which files not to crawl

- Allow: command to tell bots where to crawl

- Crawl-delay: set a delay, in seconds, to wait between crawling new pages

- Sitemap: a command to list the location of your sitemap(s)

Google has a word of caution for users:

Robots.txt vs meta robots vs x-robots

While many people use all three of these terms interchangeably, they’re all different. A robots.txt file is the actual text file that we covered in detail, but meta robots and x-robots are directives.

You can think of it this way:

- txt dictates crawl behavior

- Meta robots and x-robots are used to control indexation behavior

Google has a good explanation of blocking indexation:

Use the x-robots-tag HTTP header to block indexing of the robots.txt or sitemaps files. Also, if your robots.txt or sitemap file is ranking for normal queries (not site:), that’s usually a sign that your site is really bad off and should be improved instead. https://t.co/DpWz6sYanN

— 🐐 John 🐐 (@JohnMu) November 7, 2019

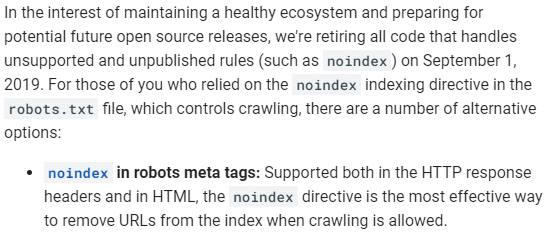

Note: noindex in robots txt is no longer supported in a robots.txt file and hasn’t been since 2019. Instead, you can set noindex in robots meta tags.

Google has the following to say about this:

Checking if you have a robots.txt file

If you’re unsure if you have a robots.txt file, log into your site using cPanel or an FTP client, and navigate to the root directory. Does the robots.txt file exist? If so, you have the file.

You can also just go to: yoursite.com/robots.txt.

How to create a robots.txt file

You can create the file on your PC and simply upload it to your site’s root. However, you can also find:

- WordPress plugins

- txt generators

- Etc.

SEO best practices

If you plan on using robots.txt, a few SEO practices to follow are:

- Verify that you’re not blocking important pages or folders from being crawled

- Links on pages that are blocked will not be followed, so link equity will not transfer

- Password protect or use noindex meta directives to prevent sensitive data from entering search results

- Submit your robots.txt to Google to ensure new, added rules are followed

Robots.txt is a powerful tool to block access to certain pieces of content or sections of your site. The guide above covers the basic steps you need to take to block access to files or grant access.